LLMs are becoming more and more widespread, and their text generation capabilities keep improving. Nevertheless, they have limitations. For example, as we saw in article XXX, their ability to reason about mathematical problems is limited, and in many cases they produce incorrect results. We have also seen, however, that it is possible to mitigate these limitations by acting only on the prompt instead of retraining the model. This lets us improve the result without investing in additional computational resources, relying on what we already have. In article XXX we introduced techniques such as few-shot and chain-of-thought (CoT) prompting. Below we look at other prompt engineering techniques that can be effective for some tasks.

Self-Consistency

The self-consistency technique is based on the idea of sampling multiple, diverse reasoning paths through few-shot CoT prompting and using the generations to select the most consistent answer, typically the one that most paths agree on (Wang et al., 2022). This helps improve the performance of CoT prompting on tasks involving arithmetic and commonsense reasoning.

Let us try the following example of arithmetic reasoning:

Q: There are 15 trees in the grove. Grove workers will plant trees in the grove today. After they are done, there will be 21 trees. How many trees did the grove workers plant today?

A: We start with 15 trees. Later we have 21 trees. The difference must be the number of trees they planted. So, they must have planted 21 - 15 = 6 trees. The answer is 6.

Q: If there are 3 cars in the parking lot and 2 more cars arrive, how many cars are in the parking lot?

A: There are 3 cars in the parking lot already. 2 more arrive. Now there are 3 + 2 = 5 cars. The answer is 5.

Q: Leah had 32 chocolates and her sister had 42. If they ate 35, how many pieces do they have left in total?

A: Leah had 32 chocolates and Leah’s sister had 42. That means there were originally 32 + 42 = 74 chocolates. 35 have been eaten. So in total they still have 74 - 35 = 39 chocolates. The answer is 39.

Q: Jason had 20 lollipops. He gave Denny some lollipops. Now Jason has 12 lollipops. How many lollipops did Jason give to Denny?

A: Jason had 20 lollipops. Since he only has 12 now, he must have given the rest to Denny. The number of lollipops he has given to Denny must have been 20 - 12 = 8 lollipops. The answer is 8.

Q: Shawn has five toys. For Christmas, he got two toys each from his mom and dad. How many toys does he have now?

A: He has 5 toys. He got 2 from mom, so after that he has 5 + 2 = 7 toys. Then he got 2 more from dad, so in total he has 7 + 2 = 9 toys. The answer is 9.

Q: There were nine computers in the server room. Five more computers were installed each day, from monday to thursday. How many computers are now in the server room?

A: There are 4 days from monday to thursday. 5 computers were added each day. That means in total 4 * 5 = 20 computers were added. There were 9 computers in the beginning, so now there are 9 + 20 = 29 computers. The answer is 29.

Q: Michael had 58 golf balls. On tuesday, he lost 23 golf balls. On wednesday, he lost 2 more. How many golf balls did he have at the end of wednesday?

A: Michael initially had 58 balls. He lost 23 on Tuesday, so after that he has 58 - 23 = 35 balls. On Wednesday he lost 2 more so now he has 35 - 2 = 33 balls. The answer is 33.

Q: Olivia has $23. She bought five bagels for $3 each. How much money does she have left?

A: She bought 5 bagels for $3 each. This means she spent $15. She has $8 left.

Q: When I was 6 my sister was half my age. Now I’m 70 how old is my sister?

A:

Output 1

If when you were 6, your sister was half your age, that means she was 3 years old at the time. Since then, the age gap between you two remains constant, so she will always be 3 years younger than you. Therefore, if you are now 70 years old, your sister would be 67 years old.

Output 2

When you were 6, your sister was half your age, which means she was 3 years old at that time. The age gap between you and your sister is 6 - 3 = 3 years. So, when you are now 70 years old, your sister would be 70 - 3 = 67 years old.

The outputs we obtained from ChatGPT are worded differently but arrive at the same final result, so the most consistent answer is 67. The original article points out that in some cases the LLM could give a wrong answer, even when the question was simply asked directly. If we submit the following question directly, without any examples,

When I was 6 my sister was half my age. Now I’m 70 how old is my sister?

ChatGPT’s response will be as follows.

When you were 6, your sister was half your age, which means she was 3 years old (half of 6).

Now, if you're 70 years old, and assuming your sister is still alive, she would be 67 years old, because the age difference between you two remains the same.

This shows that OpenAI’s LLM has improved significantly in recent times. The article by Wang et al. (2022) reported that the response obtained at the time was instead:

35

That answer is clearly wrong. The technique seen above was designed to overcome this limitation, although on certain tasks and/or with different LLMs the limitation may not arise at all.
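The selection step of self-consistency can be sketched in a few lines of Python. This is a minimal illustration, not the implementation of Wang et al. (2022): it assumes that the final answer is the last number in each generation, and the helper names `extract_answer` and `most_consistent_answer` are hypothetical. The sample list reuses the closing sentences of the two ChatGPT outputs and the wrong answer quoted above; in practice the samples would come from repeated calls to the model with a non-zero temperature.

```python
import re
from collections import Counter


def extract_answer(generation):
    """Return the last number in a generation, or None if there is none.

    Assumption: the final answer is the last number that appears in the text.
    """
    numbers = re.findall(r"-?\d+(?:\.\d+)?", generation)
    return numbers[-1] if numbers else None


def most_consistent_answer(generations):
    """Majority vote over the answers extracted from the sampled generations."""
    answers = [extract_answer(g) for g in generations]
    votes = Counter(a for a in answers if a is not None)
    if not votes:
        return None
    answer, _count = votes.most_common(1)[0]
    return answer


# Illustrative samples: two reasoning paths ending in 67 and one wrong answer.
samples = [
    "Therefore, if you are now 70 years old, your sister would be 67 years old.",
    "So, when you are now 70 years old, your sister would be 70 - 3 = 67 years old.",
    "35",
]
print(most_consistent_answer(samples))  # prints 67
```

A plain majority vote is the simplest way to aggregate the reasoning paths. Ties are broken here by the order in which the answers first appear, which is an arbitrary choice of this sketch.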

Generated Knowledge Prompting

LLMs continue to be improved. One widely used technique is to incorporate knowledge or information into the prompt to help the model make more accurate predictions.

Using a similar idea, can the model also be used to generate knowledge before making a prediction? That is what Liu et al. (2022) propose: generating knowledge to be used as part of the prompt. In particular, how useful is this for tasks such as commonsense reasoning?

Let’s try a simple prompt:

Part of golf is trying to get a higher point total than others. Yes or No?

For some LLMs, the answer may be Yes. This type of error reveals the limitations of some LLMs in performing tasks that require more knowledge of the world. However, if you try ChatGPT the answer is as follows:

No, that statement is incorrect. In golf, the objective is to complete the course in as few strokes as possible, not to achieve a higher point total. The ultimate goal is to have the lowest score, not the highest. Each stroke is counted, and the player with the fewest strokes at the end of the round is the winner.

If the LLM has gaps in its knowledge of a given context, how can we improve the result with knowledge generation? First, we generate some “knowledge” with a few-shot prompt:

Input: Greece is larger than mexico.

Knowledge: Greece is approximately 131,957 sq km, while Mexico is approximately 1,964,375 sq km, making Mexico 1,389% larger than Greece.

Input: Glasses always fog up.

Knowledge: Condensation occurs on eyeglass lenses when water vapor from your sweat, breath, and ambient humidity lands on a cold surface, cools, and then changes into tiny drops of liquid, forming a film that you see as fog. Your lenses will be relatively cool compared to your breath, especially when the outside air is cold.

Input: A fish is capable of thinking.

Knowledge: Fish are more intelligent than they appear. In many areas, such as memory, their cognitive powers match or exceed those of ’higher’ vertebrates including non-human primates. Fish’s long-term memories help them keep track of complex social relationships.

Input: A common effect of smoking lots of cigarettes in one’s lifetime is a higher than normal chance of getting lung cancer.

Knowledge: Those who consistently averaged less than one cigarette per day over their lifetime had nine times the risk of dying from lung cancer than never smokers. Among people who smoked between one and 10 cigarettes per day, the risk of dying from lung cancer was nearly 12 times higher than that of never smokers.

Input: A rock is the same size as a pebble.

Knowledge: A pebble is a clast of rock with a particle size of 4 to 64 millimetres based on the Udden-Wentworth scale of sedimentology. Pebbles are generally considered larger than granules (2 to 4 millimetres diameter) and smaller than cobbles (64 to 256 millimetres diameter).

Input: Part of golf is trying to get a higher point total than others.

Knowledge:

The answers provided by Liu et al. (2022) are as follows.

Output 1:

The objective of golf is to play a set of holes in the least number of strokes. A round of golf typically consists of 18 holes. Each hole is played once in the round on a standard golf course. Each stroke is counted as one point, and the total number of strokes is used to determine the winner of the game.

Output 2:

Golf is a precision club-and-ball sport in which competing players (or golfers) use many types of clubs to hit balls into a series of holes on a course using the fewest number of strokes. The goal is to complete the course with the lowest score, which is calculated by adding up the total number of strokes taken on each hole. The player with the lowest score wins the game.

At this point, the authors suggest integrating the knowledge into the prompt and obtaining a prediction. We reformat the question in Q/A format to guide the format of the response.

Question: Part of golf is trying to get a higher point total than others. Yes or No?

Knowledge: The objective of golf is to play a set of holes in the least number of strokes. A round of golf typically consists of 18 holes. Each hole is played once in the round on a standard golf course. Each stroke is counted as one point, and the total number of strokes is used to determine the winner of the game.

Explain and Answer:

The paper reports different answers depending on the LLM used. Some are correct because the model is more confident about the information provided. In other cases, however, the answer is still wrong. But how does ChatGPT perform on the original question, without any added knowledge? This is its answer.

No, that statement is incorrect. In golf, the objective is to complete the course in as few strokes as possible, not to achieve a higher point total. The ultimate goal is to have the lowest score, not the highest. Each stroke is counted, and the player with the fewest strokes at the end of the round is the winner.

As can be seen, the model behind ChatGPT has been trained on a large amount of data, which allows it to respond correctly even in contexts that are more niche or unusual. Nevertheless, providing knowledge directly in the prompt can come to the rescue on certain tasks and contexts.
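The two stages described in this section, knowledge generation and knowledge integration, can be sketched as plain prompt construction. The following is a minimal sketch under stated assumptions: the function names `build_knowledge_prompt` and `build_answer_prompt` are hypothetical, no model is actually called, and the "generated" knowledge is simply the first sentence of Output 1 quoted above.

```python
def build_knowledge_prompt(demonstrations, statement):
    """Few-shot prompt asking the model to generate knowledge for a statement."""
    blocks = [f"Input: {inp}\nKnowledge: {know}" for inp, know in demonstrations]
    blocks.append(f"Input: {statement}\nKnowledge:")
    return "\n\n".join(blocks)


def build_answer_prompt(question, knowledge):
    """Prompt that integrates one generated knowledge statement."""
    return f"Question: {question}\nKnowledge: {knowledge}\nExplain and Answer:"


# A single demonstration taken from the few-shot prompt above (shortened list).
demonstrations = [
    ("Greece is larger than mexico.",
     "Greece is approximately 131,957 sq km, while Mexico is approximately "
     "1,964,375 sq km, making Mexico 1,389% larger than Greece."),
]
statement = "Part of golf is trying to get a higher point total than others."

knowledge_prompt = build_knowledge_prompt(demonstrations, statement)

# Assumption: in a real pipeline this string would be the model's reply to
# `knowledge_prompt`; here it is copied from Output 1 for illustration.
knowledge = ("The objective of golf is to play a set of holes "
             "in the least number of strokes.")
answer_prompt = build_answer_prompt(statement + " Yes or No?", knowledge)

print(knowledge_prompt)
print()
print(answer_prompt)
```

Keeping the two prompts separate makes it possible to sample several knowledge statements and build one answer prompt for each of them.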

Tree of Thoughts (ToT)

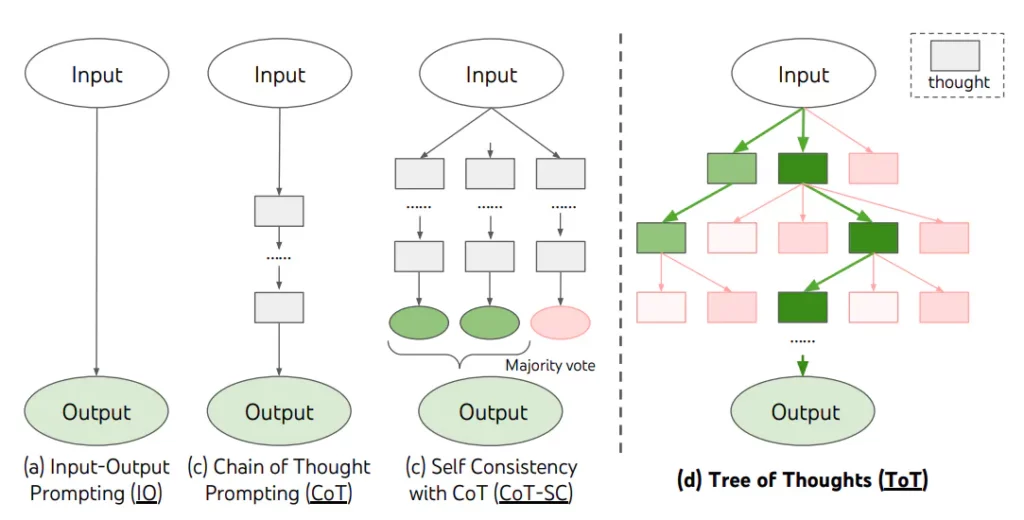

As we have seen in this and previous articles, traditional prompting techniques fall short on more complex tasks that require, for example, strategy or the exploration of multiple alternatives. Yao et al. (2023) and Long (2023) proposed Tree of Thoughts (ToT), a generalization of chain-of-thought prompting that encourages the exploration of multiple “thoughts” used as intermediate steps in problem solving with language models (LMs).

The ToT technique creates a tree of thoughts, as shown in the figure, where the thoughts are linguistic sequences that represent the steps to achieve problem solving. This approach allows an LM to evaluate its own intermediate progress toward solving the problem. The LM’s ability to generate and evaluate “thoughts” is combined with search algorithms (e.g., breadth-first search and depth-first search), so that thoughts are explored with lookahead and backtracking.

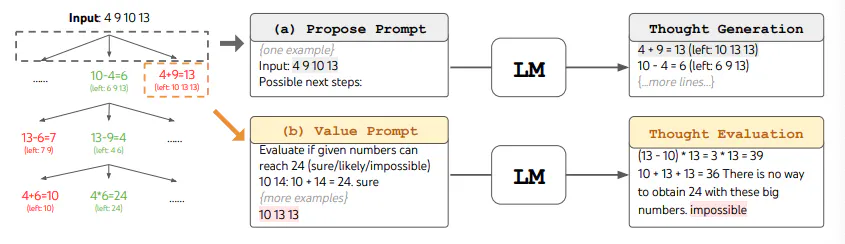

When using the ToT technique, it is necessary to define the number of candidate thoughts to keep (the most promising ones) and the number of steps the LM has to perform to reach the solution. In the paper by Yao et al. (2023), the Game of 24 is used as a mathematical reasoning task that is decomposed into 3 steps, each involving an intermediate equation. At each step, the best b=5 candidates are kept.

To perform a breadth-first search (BFS) with ToT on the Game of 24, each candidate step is evaluated according to how likely it is that the number 24 can be reached through the proposed mathematical operation. Each candidate is assigned one of the labels “sure”, “maybe”, or “impossible”. As stated by the authors, the aim is to promote correct partial solutions that can be verified by looking ahead a few steps, to eliminate impossible partial solutions based, for example, on the size of the numbers (“the number is too small/large to reach 24 in the next steps”), and to keep the rest, those labeled “maybe”. Values are sampled 3 times for each step.
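The search loop described above can be sketched as follows. This is not the authors' code: to keep the example self-contained and runnable, the two LM calls are replaced by deterministic stand-ins. The hypothetical `propose` function enumerates every way of combining two numbers, and the hypothetical `evaluate` function uses an exact brute-force lookahead instead of the LM's judgment, so it only returns "maybe" for states it does not check. The numeric scores in `LABEL_SCORE` and the puzzle instance 4, 9, 10, 13 are also assumptions made for illustration.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical numeric scores for the three labels described in the text.
LABEL_SCORE = {"sure": 2, "maybe": 1, "impossible": 0}
TARGET = 24


def propose(state):
    """Stand-in for the LM proposal step: combine any two remaining numbers."""
    numbers, steps = state
    children = []
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = tuple(n for k, n in enumerate(numbers) if k not in (i, j))
        options = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                   (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            options.append((a / b, f"{a} / {b}"))
        if a != 0:
            options.append((b / a, f"{b} / {a}"))
        for value, expr in options:
            children.append((rest + (value,), steps + (f"{expr} = {value}",)))
    return children


def reachable(numbers):
    """Exact lookahead: can the remaining numbers still be combined into 24?"""
    if len(numbers) == 1:
        return numbers[0] == TARGET
    return any(reachable(child) for child, _ in propose((numbers, ())))


def evaluate(state):
    """Stand-in for the LM value step: label a partial solution."""
    numbers, _ = state
    if len(numbers) > 3:
        return "maybe"  # not checked in this sketch
    return "sure" if reachable(numbers) else "impossible"


def tot_bfs(start_numbers, n_steps=3, beam_width=5):
    """Breadth-first search that keeps the best `beam_width` states per step."""
    frontier = [(tuple(Fraction(n) for n in start_numbers), ())]
    for _ in range(n_steps):
        candidates = [child for state in frontier for child in propose(state)]
        scored = [(LABEL_SCORE[evaluate(c)], c) for c in candidates]
        # Drop "impossible" states, then keep the highest-scoring ones.
        scored = [sc for sc in scored if sc[0] > 0]
        scored.sort(key=lambda sc: sc[0], reverse=True)
        frontier = [c for _, c in scored[:beam_width]]
    return frontier


solutions = tot_bfs([4, 9, 10, 13])
for numbers, steps in solutions[:1]:
    print(" | ".join(steps))
```

With a real LM, `propose` and `evaluate` would each send a prompt to the model, and the evaluation would be sampled several times per state and aggregated, as the authors describe. Because the LM's labels are uncertain, the beam can then discard a correct path, which the exact check used here never does.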

The results reported by the authors show that this technique outperforms those we have seen before. Of course, it requires developing ad hoc code both to submit queries to the model and to evaluate the various responses. An example can be found here.

But what if we want to apply this technique using only a text prompt? Hulbert (2023) proposed a possible prompt structure to answer this question.

An example is as follows:

Imagine three different experts are answering this question.

All experts will write down 1 step of their thinking,

then share it with the group.

Then all experts will go on to the next step, etc.

If any expert realises they're wrong at any point then they leave.

The question is...
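To reuse this prompt with different questions, it can be wrapped in a small template. The sketch below is only a convenience helper: the name `build_tot_prompt` is hypothetical, the template text is copied from the prompt above, and the sample question is the age puzzle used earlier in this article.

```python
# Template copied from the prompt above; only the question is variable.
TOT_PROMPT_TEMPLATE = (
    "Imagine three different experts are answering this question.\n"
    "All experts will write down 1 step of their thinking,\n"
    "then share it with the group.\n"
    "Then all experts will go on to the next step, etc.\n"
    "If any expert realises they're wrong at any point then they leave.\n"
    "The question is... {question}"
)


def build_tot_prompt(question):
    """Fill the single-prompt ToT template with a concrete question."""
    return TOT_PROMPT_TEMPLATE.format(question=question)


question = "When I was 6 my sister was half my age. Now I'm 70 how old is my sister?"
prompt = build_tot_prompt(question)
print(prompt)
```

The resulting string can be sent to any chat model as a single message, with no extra code for search or evaluation.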