In the previous articles, "Prompt engineering" and "LLM: Prompt Examples", we introduced the figure of the prompt engineer and presented practical examples. The examples showed that the structure of a prompt can significantly influence the final result. This is because each generated word, or rather token, depends on a conditional probability given everything that precedes it. What the prompt provides therefore strongly influences everything that is generated afterwards. Many research works have focused more on how to construct prompts for a desired output than on fine-tuning the underlying model. In this article we will look at some of the techniques proposed in recent years. Let us start.
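Formally, an autoregressive language model factorizes the probability of a token sequence into a product of conditional probabilities. Every generated token is therefore conditioned on the prompt and on all tokens generated before it. The notation is ours, not the article's: $x_1, \dots, x_T$ denotes the token sequence, that is, the prompt followed by the generated text.

```latex
p(x_1, \dots, x_T) = \prod_{t=1}^{T} p\left(x_t \mid x_1, \dots, x_{t-1}\right)
```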

Zero-Shot Prompt

Because LLMs have been trained on huge amounts of data, they can perform tasks “zero-shot”. This means without any examples in the prompt and without retraining the model for the context of interest. In the article LLM: Prompt Examples we already saw this technique applied, for instance to classify text by the sentiment it expresses. Below is one of those examples.

Classify the text into neutral, negative or positive.

Text: I think the food was good.

Sentiment:

Output

Sentiment: Positive
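Such a zero-shot prompt can be assembled programmatically. This minimal sketch builds one from three parts: an instruction listing the allowed labels, the input text, and an open "Sentiment:" cue for the model to complete. The helper `zero_shot_prompt` is hypothetical. Sending the prompt to a model is left out, because no particular API is assumed.

```python
def zero_shot_prompt(text: str, labels: list[str]) -> str:
    """Build a zero-shot classification prompt: an instruction plus the input, no examples."""
    # Join the labels as "a, b or c", matching the wording of the article's prompt.
    if len(labels) > 1:
        label_list = ", ".join(labels[:-1]) + " or " + labels[-1]
    else:
        label_list = labels[0]
    return (
        f"Classify the text into {label_list}.\n\n"
        f"Text: {text}\n\n"
        "Sentiment:"  # left open: the model is expected to complete the label
    )


prompt = zero_shot_prompt("I think the food was good.", ["neutral", "negative", "positive"])
print(prompt)
```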

Unfortunately, this technique does not always work. When it fails, you can try the next technique: the few-shot prompt.

Few-Shot Prompt

The few-shot prompt is a technique that enables in-context learning. Demonstrations are provided directly in the prompt to “guide” the model toward better performance. The examples condition the model and define the expected output for the input submitted afterwards.

Let us see the few-shot prompt in action using the example from Brown et al. (2020). Here the task is to use a new word correctly in a sentence.

A "whatpu" is a small, furry animal native to Tanzania. An example of a sentence that uses

the word whatpu is:

We were traveling in Africa and we saw these very cute whatpus.

To do a "farduddle" means to jump up and down really fast. An example of a sentence that uses

the word farduddle is:

Output

We were so excited to see the band play that we couldn't help but farduddle in the front row.

The model has learned to perform the task from a single example (in this case we speak of a 1-shot prompt). For more difficult tasks, we can increase the number of examples in the prompt.
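A few-shot prompt has a simple structure. Each demonstration follows the same template, and the query repeats the template but stops where the model is expected to continue. The sketch below assumes the template of the whatpu/farduddle example. `Demo` and `few_shot_prompt` are hypothetical names, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class Demo:
    """One demonstration: the definition of a new word and a sentence that uses it."""
    definition: str
    word: str
    sentence: str


def few_shot_prompt(demos: list[Demo], query_definition: str, query_word: str) -> str:
    """Concatenate the demonstrations, then leave the final example open for the model."""
    blocks = [
        f"{d.definition} An example of a sentence that uses the word {d.word} is:\n{d.sentence}"
        for d in demos
    ]
    # The query follows the same template but stops where the model should continue.
    blocks.append(f"{query_definition} An example of a sentence that uses the word {query_word} is:")
    return "\n\n".join(blocks)


demos = [
    Demo('A "whatpu" is a small, furry animal native to Tanzania.', "whatpu",
         "We were traveling in Africa and we saw these very cute whatpus."),
]
prompt = few_shot_prompt(demos, 'To do a "farduddle" means to jump up and down really fast.', "farduddle")
print(prompt)
```

Passing more `Demo` objects turns this 1-shot prompt into a k-shot prompt without changing the template.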

Based on the results presented by Min et al. (2022), here are some further tips on providing examples in a few-shot prompt:

- “the label space and the distribution of input text specified by the demonstrations are both important (regardless of whether the labels are correct for individual inputs).”

- the format used plays a key role in performance: even random labels are much better than no labels at all.

- selecting random labels from the true distribution of labels helps more than selecting them from a uniform distribution.
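The last recommendation distinguishes two ways of drawing random labels for the demonstrations. This sketch shows both, assuming a hypothetical labelled dataset with three positive reviews for every negative one. The helper names are illustrative. The only library call is the standard `random.choices`, which samples uniformly when `weights` is `None`.

```python
import random
from collections import Counter


def label_distribution(labels: list[str]) -> dict[str, float]:
    """Empirical frequency of each label in a (hypothetical) labelled dataset."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}


def random_labels(n: int, dist: dict[str, float], uniform: bool, rng: random.Random) -> list[str]:
    """Draw n labels at random, independently of the inputs they will be paired with.

    uniform=True gives every label the same weight; uniform=False samples from
    the true label distribution, the option Min et al. (2022) found more helpful.
    """
    labels = list(dist)
    weights = None if uniform else [dist[label] for label in labels]
    return rng.choices(labels, weights=weights, k=n)


# Hypothetical dataset: 3 positive reviews for every negative one.
dataset_labels = ["Positive"] * 75 + ["Negative"] * 25
dist = label_distribution(dataset_labels)
rng = random.Random(0)
print(random_labels(4, dist, uniform=False, rng=rng))
print(random_labels(4, dist, uniform=True, rng=rng))
```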

Let us look at some examples to better understand these recommendations. We start with random labels (i.e., the Negative and Positive labels are assigned to the examples at random):

This is awesome! // Negative

This is bad! // Positive

Wow that movie was rad! // Positive

What a horrible show! //

Output

It seems like there might be a mix-up in your statements. Let me help clarify:

1. "This is awesome!" - Positive

2. "This is bad!" - Negative

3. "Wow that movie was rad!" - Positive

4. "What a horrible show!" - Negative

So, in this corrected version:

1. Positive

2. Negative

3. Positive

4. Negative

Not only do we get the correct answer even though the labels were assigned at random, but ChatGPT also corrects the labels we provided. Note that we also kept a consistent format, which helps. Our experiment suggests that the latest ChatGPT model (September 2023) is much more robust to random inputs, even to a randomized format. Example:

Positive This is awesome!

This is bad! Negative

Wow that movie was rad!

Positive

What a horrible show! –

Output

Negative

The format is not consistent, yet the model still returns the correct label.

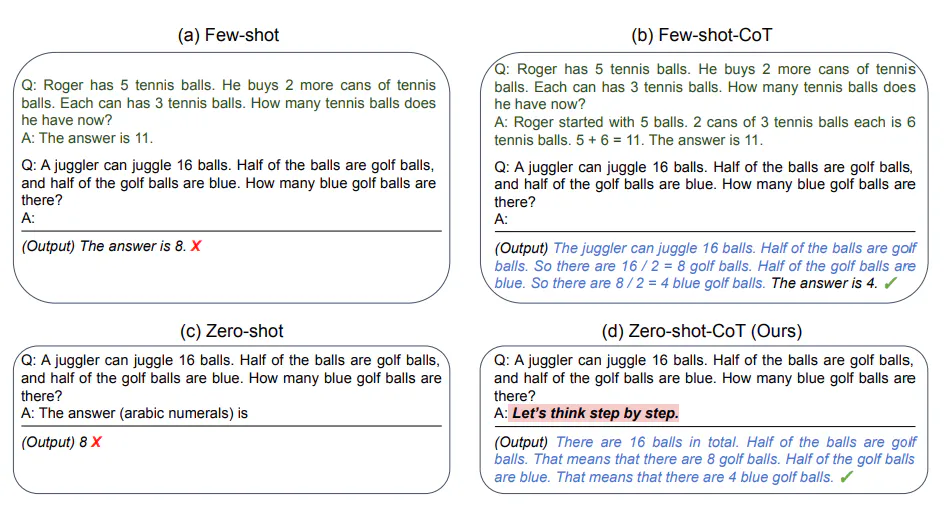

Limitations of Few-Shot Prompting

The standard few-shot prompt works well for many tasks, but it is not a perfect technique, especially for more complex reasoning tasks. The following example shows why.

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: The answer is False.

The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.

A: The answer is True.

The odd numbers in this group add up to an even number: 16, 11, 14, 4, 8, 13, 24.

A: The answer is True.

The odd numbers in this group add up to an even number: 17, 9, 10, 12, 13, 4, 2.

A: The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

Output

The answer is True.

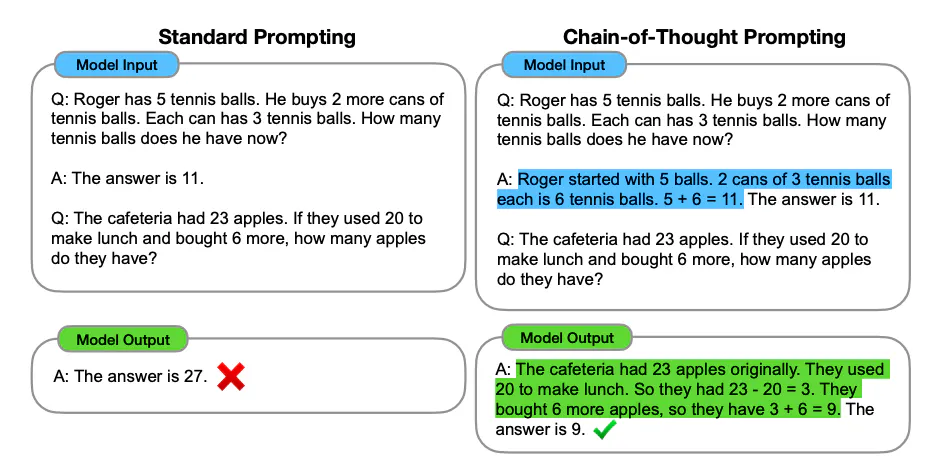

The answer is wrong: 15 + 5 + 13 + 7 + 1 = 41, which is odd. For this type of reasoning problem, a few-shot prompt does not seem sufficient to obtain reliable answers. The examples above convey the basic information about the task, but the task itself requires several reasoning steps. In other words, it may help to decompose the problem into steps and demonstrate them to the model. Chain-of-thought (CoT) prompting was introduced more recently to address complex tasks that require arithmetic, commonsense and/or symbolic reasoning.
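We can make the required reasoning steps explicit and check the expected answers by decomposing the task in code: select the odd numbers, add them up, then test the parity of the sum. This is a minimal sketch; the helper name `odd_sum_is_even` is hypothetical.

```python
def odd_sum_is_even(numbers: list[int]) -> tuple[list[int], int, bool]:
    """Decompose the task: pick the odd numbers, add them, then check the parity of the sum."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    return odds, total, total % 2 == 0


# The five groups used in the prompt above.
groups = [
    [4, 8, 9, 15, 12, 2, 1],
    [17, 10, 19, 4, 8, 12, 24],
    [16, 11, 14, 4, 8, 13, 24],
    [17, 9, 10, 12, 13, 4, 2],
    [15, 32, 5, 13, 82, 7, 1],
]
for group in groups:
    odds, total, is_even = odd_sum_is_even(group)
    print(f"odd numbers {odds} sum to {total} -> {is_even}")
```

The last group gives `[15, 5, 13, 7, 1]`, which sums to 41, so the correct answer is False.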

However, such tasks can sometimes be solved correctly without a few-shot prompt. Let us apply the zero-shot technique to the same question.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

Output

The odd numbers in the given group are 15, 5, 13, 7, and 1. If we add them up, we get:

15 + 5 + 13 + 7 + 1 = 41

So, the sum of the odd numbers in this group is 41, which is an odd number. The statement "The odd numbers in this group add up to an even number" is not correct based on the provided numbers.

In this case ChatGPT manages to break the problem down and gives the correct answer along with a justification.

In general, providing examples seems to help on tasks where zero-shot prompting does not work. When neither zero-shot nor few-shot prompts are sufficient, it may mean that what the model has learned is not enough to perform the task well. At that point, options include fine-tuning the model or using more advanced prompting techniques. Below we discuss one of the most popular of these, chain-of-thought prompting (the paper that introduced it has more than 1400 citations to date).

Chain-of-Thought Prompt

Introduced in Wei et al. (2022), chain-of-thought (CoT) prompting enables complex reasoning through intermediate reasoning steps. It can be combined with few-shot prompting to achieve better results on complex tasks that require reasoning before responding.
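In a few-shot CoT prompt, each demonstration writes out the intermediate step before the final answer. For the odd-numbers task those rationales can be generated mechanically. This sketch builds the prompt in the format used in the examples of this section. The helper names `cot_answer`, `question` and `few_shot_cot_prompt` are hypothetical, and the rationale template is simply copied from those examples.

```python
def cot_answer(numbers: list[int]) -> str:
    """Write a chain-of-thought answer: the intermediate step, then the final verdict."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    odds_text = ", ".join(str(n) for n in odds)
    return f"Adding all the odd numbers ({odds_text}) gives {total}. The answer is {total % 2 == 0}."


def question(numbers: list[int]) -> str:
    """Render one instance of the task as a question line."""
    return "The odd numbers in this group add up to an even number: " + ", ".join(map(str, numbers)) + "."


def few_shot_cot_prompt(examples: list[list[int]], query: list[int]) -> str:
    """Each demonstration shows its reasoning before the answer; the query is left open after 'A:'."""
    parts = [f"{question(ex)}\nA: {cot_answer(ex)}" for ex in examples]
    parts.append(f"{question(query)}\nA:")
    return "\n\n".join(parts)


prompt = few_shot_cot_prompt([[4, 8, 9, 15, 12, 2, 1]], [15, 32, 5, 13, 82, 7, 1])
print(prompt)
```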

Let’s see an example.

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: Adding all the odd numbers (9, 15, 1) gives 25. The answer is False.

The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.

A: Adding all the odd numbers (17, 19) gives 36. The answer is True.

The odd numbers in this group add up to an even number: 16, 11, 14, 4, 8, 13, 24.

A: Adding all the odd numbers (11, 13) gives 24. The answer is True.

The odd numbers in this group add up to an even number: 17, 9, 10, 12, 13, 4, 2.

A: Adding all the odd numbers (17, 9, 13) gives 39. The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

Output

Adding all the odd numbers (15, 5, 13, 7, 1) gives 41. The answer is False.

Perfect! When the few-shot prompt also spells out the reasoning to perform, the model solves the new instance without error. Let us see whether the technique still works with fewer examples.

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: Adding all the odd numbers (9, 15, 1) gives 25. The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

Output

Adding all the odd numbers (15, 5, 13, 7, 1) gives 41. The answer is False.

It works in this case as well!

Note

The authors state that this is an emergent ability that appears with sufficiently large language models, such as ChatGPT.

Zero-Shot CoT Prompting

A more recent idea is zero-shot CoT (Kojima et al. 2022), which essentially consists of adding “Let's think step by step” to the original prompt.
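Zero-shot CoT prompting needs no demonstrations: the trigger phrase is simply appended after the original question. This minimal sketch applies it to the apple question used in the experiment that follows. The helper `zero_shot_cot` is hypothetical. Placing the trigger on its own paragraph mirrors the prompt shown in this article and is otherwise an arbitrary choice.

```python
# Trigger phrase proposed by Kojima et al. (2022) and used in this article.
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(prompt: str, trigger: str = COT_TRIGGER) -> str:
    """Zero-shot CoT: append the reasoning trigger after the original prompt, with no examples."""
    return f"{prompt.rstrip()}\n\n{trigger}"


question = (
    "I went to the market and bought 10 apples. I gave 2 apples to the neighbor "
    "and 2 to the repairman. I then went and bought 5 more apples and ate 1. "
    "How many apples did I remain with?"
)
print(zero_shot_cot(question))
```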

Let us try a simple arithmetic problem and see how the model behaves without applying any technique:

I went to the market and bought 10 apples. I gave 2 apples to the neighbor and 2 to the repairman. I then went and bought 5 more apples and ate 1. How many apples did I remain with?

Output

You started with 10 apples, gave away 2, and then bought 5 more. This means you had 10 - 2 + 5 = 13 apples. After eating one, you remained with 13 - 1 = 12 apples.

The answer is wrong: the model left out the 2 apples given to the repairman. Let us then try the zero-shot CoT technique.
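Tracking the count after each event makes the correct result easy to verify. This is a minimal sketch; each step of the story is encoded as a (description, change) pair.

```python
# Each event in the story as (description, change in the number of apples).
steps = [
    ("bought 10 apples", +10),
    ("gave 2 to the neighbor", -2),
    ("gave 2 to the repairman", -2),
    ("bought 5 more apples", +5),
    ("ate 1 apple", -1),
]

apples = 0
for description, delta in steps:
    apples += delta
    print(f"{description}: {apples}")
```

The running totals are 10, 8, 6, 11 and 10, so the correct answer is 10 apples.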

I went to the market and bought 10 apples. I gave 2 apples to the neighbor and 2 to the repairman. I then went and bought 5 more apples and ate 1. How many apples did I remain with?

Let's think step by step.

Output

Sure! Let's break it down step by step:

1. You started with 10 apples.

2. You gave 2 apples to the neighbor, so you had 10 - 2 = 8 apples remaining.

3. You also gave 2 apples to the repairman, so you had 8 - 2 = 6 apples remaining.

4. After that, you went and bought 5 more apples, which means you now have 6 + 5 = 11 apples.

5. You ate 1 apple, so now you have 11 - 1 = 10 apples remaining.

So, you currently have 10 apples.

The result is correct! Just by adding a short instruction to the prompt we obtained both a step-by-step explanation and the correct answer. This suggests that the technique can be especially useful when you do not have many examples to include in the prompt.