Large language models (LLMs) are the basis of today's artificial intelligence engines. We have already covered some prompting techniques for getting the desired answers for a given task. However, the discipline keeps evolving as the models themselves are continuously updated. In this article we therefore look at recent concepts for designing a well-structured prompt and at the types of prompts that lead LLMs to generate the desired response.

What is prompt engineering?

Prompt engineering is not strictly an engineering discipline or a precise science supported by mathematical and scientific foundations. It is defined as “the process [and practice] of structuring text that can be interpreted and understood by a generative model of artificial intelligence.” It is, therefore, a practice with a set of guidelines for creating accurate, concise and creative texts to instruct an LLM to perform a task. Another way of putting it is that “prompt engineering is the art of communicating with a large generative language model.”

To communicate with LLMs through precise, task-specific instructions, Bsharat et al. present comprehensive guidelines for improving the quality of prompts. The study suggests that, because LLMs show impressive ability in understanding natural language and performing tasks in various domains (answering questions, mathematical reasoning, code generation, translation, summarization, and so on), a principled approach to crafting the various types of prompts substantially improves the generated response.

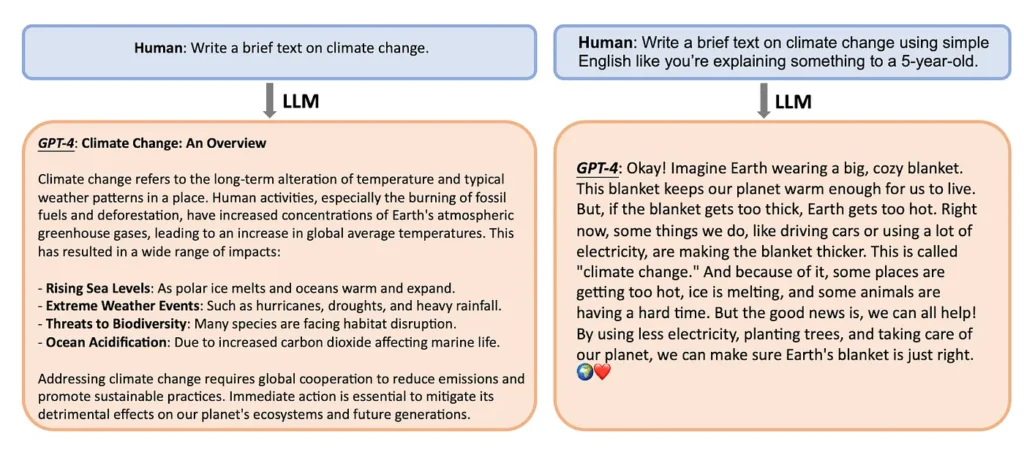

Take this simple example of a prompt before and after applying one of the principles, conciseness and precision. The response to the revised prompt is simpler, more readable, and closer to the intended target.

As can be inferred from the above example, the more concise and precise the prompt, the better the LLM understands the task at hand and, consequently, the better the response it formulates.

Let us examine some principles, techniques and types of prompts that provide a better understanding of how to perform a task in various domains of natural language processing.

Prompt principles and guidelines

Bsharat et al. listed 26 ordered prompt principles, which can be further classified into five distinct categories, as illustrated in the figure below.

The principles can be categorized as follows:

- Structure and clarity of the prompt: Integrate the intended audience into the prompt.

- Specificity and information: Implement prompts guided by examples (use few-shot prompts).

- User interaction and involvement: Allow the model to ask for specific details and requirements until it has enough information to provide the necessary response.

- Content and language style: Instruct the tone and style of the response.

- Complex tasks and coding prompts: Break down complex tasks into a sequence of simpler steps in the form of prompts.
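Two of these principles, naming the intended audience and guiding the model with examples, can be combined in a small prompt builder. The following is only a minimal sketch: the function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sample reviews are illustrative assumptions, not taken from Bsharat et al.

```python
def build_few_shot_prompt(task, examples, query, audience=None):
    """Assemble a few-shot prompt: task, optional audience, worked examples, new input.

    Hypothetical helper for illustration; the layout is one possible convention.
    """
    lines = [task]
    if audience:
        # Principle: integrate the intended audience into the prompt.
        lines.append(f"The audience is {audience}.")
    lines.append("")
    # Principle: guide the model with a few worked examples (few-shot).
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The new input is left open so the model completes the final "Output:".
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    task="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    query="Setup took five minutes and everything worked.",
    audience="a customer-support analyst",
)
print(prompt)
```

The resulting string can be sent to any chat or completion model as the user prompt.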

Similarly, Elvis Saravia’s prompt design guide states that a prompt can contain many elements:

- Instruction: describes a specific task that you want the model to perform.

- Context: additional information or context that can guide the model’s response.

- Input data: expressed as input or questions to be answered by the model.

- Output format and style: the type or format of the output, such as JSON, or how many lines or paragraphs.

Prompts are associated with roles, and roles inform an LLM about who is interacting with it and what the interactive behavior should be. For example, a system prompt tells an LLM to assume the role of Assistant or Teacher.

A user assumes the role of providing any of the above elements in the prompt for the LLM to use in its response. Saravia, like the study by Bsharat et al., states that prompt engineering is a precise art of communication. In other words, to get the best response, the prompt must be crafted to be precise, simple, and specific. The more concise and precise it is, the better the response will be.
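The elements and roles described above can be sketched as a list of role-tagged messages. This is a minimal illustration under stated assumptions: the helper `build_messages` is hypothetical, and the `role`/`content` dictionary layout follows the message convention used by common chat-style APIs, so check your provider's documentation for the exact format it expects.

```python
def build_messages(instruction, context, input_data, output_format,
                   system_role="You are a helpful assistant."):
    """Combine the four prompt elements into system and user messages.

    Hypothetical helper; element labels are an illustrative convention.
    """
    user_content = "\n\n".join([
        f"Instruction: {instruction}",
        f"Context: {context}",
        f"Input: {input_data}",
        f"Output format: {output_format}",
    ])
    return [
        # The system message sets the role the model should assume.
        {"role": "system", "content": system_role},
        # The user message carries instruction, context, input and format.
        {"role": "user", "content": user_content},
    ]


messages = build_messages(
    instruction="Explain the term in the input to a beginner.",
    context="The reader is new to machine learning.",
    input_data="few-shot prompting",
    output_format="Two short paragraphs of plain text.",
    system_role="You are a patient teacher.",
)
for message in messages:
    print(message["role"], "->", message["content"])
```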

OpenAI’s guide on prompt engineering offers similar authoritative advice, backed by demonstrable examples:

- Write clear instructions

- Provide reference text

- Divide complex tasks into simpler subtasks

- Give the model time to “think”
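Dividing a complex task into simpler subtasks can be sketched as a chain in which each step receives the previous step's output, wrapped in delimiters so the instruction stays clearly separated from the text. Everything here is an assumption for illustration: `run_subtasks` is a hypothetical helper, and `echo_llm` is a stand-in that only echoes the instruction so the sketch runs without any API access. In practice you would pass a function that calls a real model.

```python
from typing import Callable, List


def run_subtasks(document: str, steps: List[str], llm: Callable[[str], str]) -> str:
    """Run the steps in order, feeding each step the previous step's output."""
    current = document
    for step in steps:
        # Clear instruction first, then the text to work on inside delimiters.
        prompt = f'{step}\n\nText (delimited by triple quotes):\n"""{current}"""'
        current = llm(prompt)
    return current


def echo_llm(prompt: str) -> str:
    # Stand-in for a real model call: echoes the instruction line only.
    return f"[response to: {prompt.splitlines()[0]}]"


result = run_subtasks(
    document="Long quarterly report text goes here.",
    steps=[
        "Summarize the text in three sentences.",
        "Translate the summary into French.",
    ],
    llm=echo_llm,
)
print(result)
```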

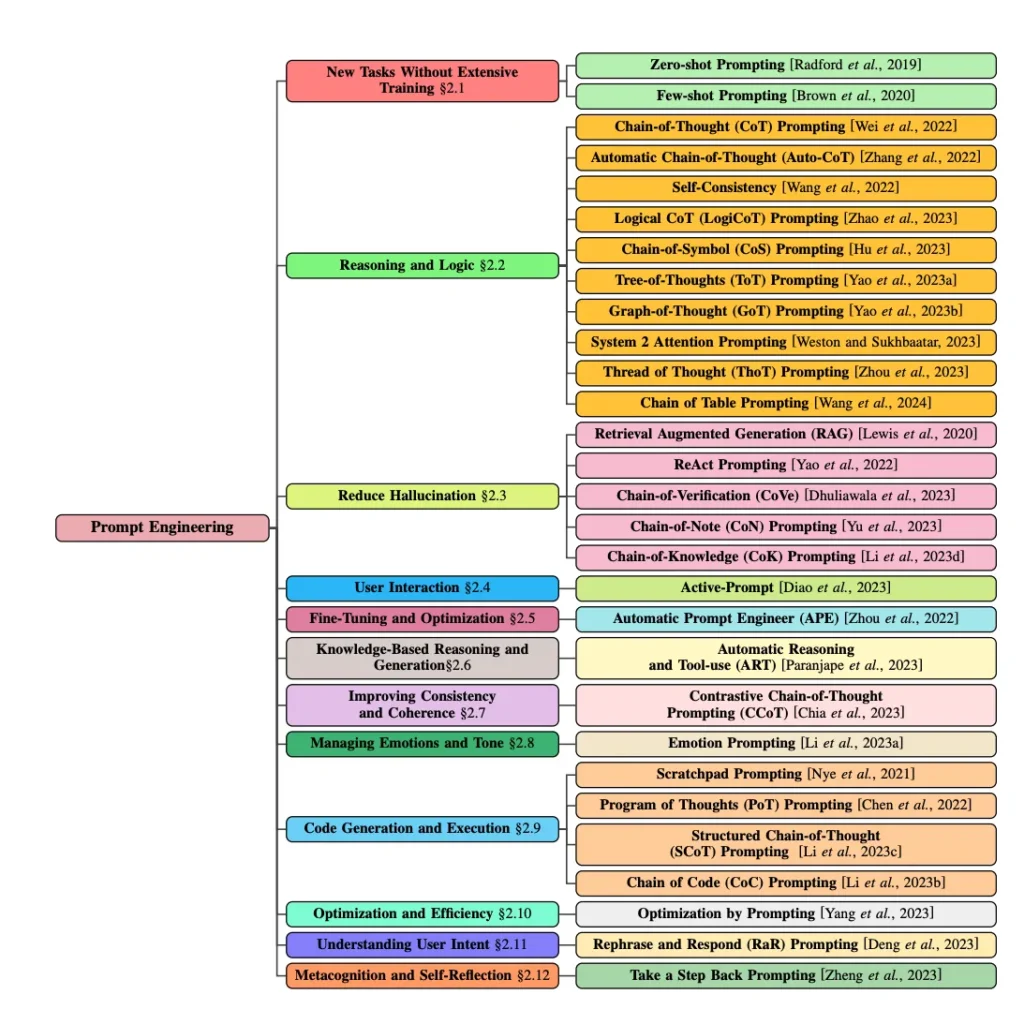

Finally, Sahoo, Singh, Saha et al. present a systematic survey of prompt engineering techniques and offer a concise “overview of the evolution of prompting techniques, ranging from zero-shot prompting to the latest advances.” The techniques fall into distinct categories, as shown in the figure below.

CO-STAR Prompt Framework

The CO-STAR framework goes a step further: it distills all the above guidelines and principles into a practical approach.

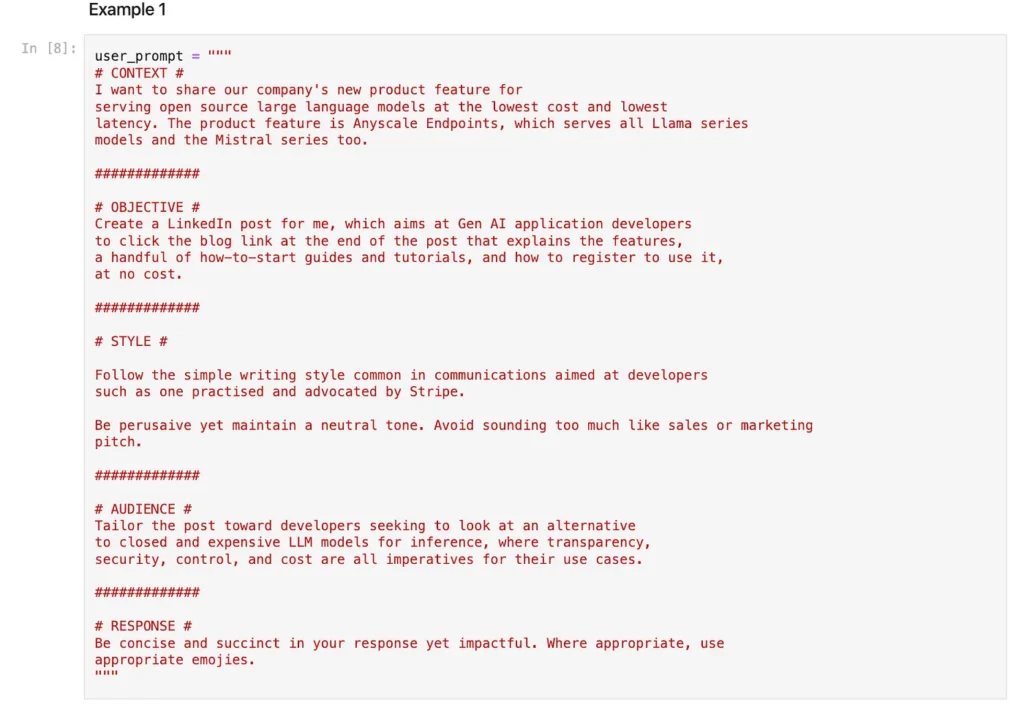

In her blog post on winning Singapore’s GPT-4 prompt engineering competition, Sheila Teo offers a practical strategy and valuable insights on how to get the best results from an LLM using the CO-STAR framework.

In a nutshell, Teo condenses the principles of Bsharat et al., along with the other principles mentioned above, into six simple, digestible terms that form the acronym CO-STAR:

- C: Context: Provide information about the context and the task

- O: Objective: Define the task you want the LLM to perform

- S: Style: Specify the writing style you want the LLM to use

- T: Tone: Set the attitude and tone of the response

- A: Audience: Identify the recipient of the response

- R: Response: Provide the format and style of the response

Here is a simple example of a CO-STAR request:
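As a minimal sketch, the six fields can be held in a small data class and rendered into one prompt string. The class name `CoStarPrompt`, the `# NAME #` section headers, and all field contents below are illustrative assumptions rather than Teo's original example.

```python
from dataclasses import dataclass


@dataclass
class CoStarPrompt:
    """Container for the six CO-STAR fields (hypothetical class name)."""
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        # Emit the sections in C-O-S-T-A-R order, each under its own header.
        sections = [
            ("CONTEXT", self.context),
            ("OBJECTIVE", self.objective),
            ("STYLE", self.style),
            ("TONE", self.tone),
            ("AUDIENCE", self.audience),
            ("RESPONSE", self.response),
        ]
        return "\n\n".join(f"# {name} #\n{text}" for name, text in sections)


costar = CoStarPrompt(
    context="I run a small bakery and am launching a sourdough subscription.",
    objective="Write a short announcement for our email newsletter.",
    style="Plain, friendly marketing copy.",
    tone="Warm and enthusiastic.",
    audience="Existing customers who already buy bread from us.",
    response="One paragraph of at most 80 words, followed by a one-line call to action.",
)
print(costar.render())
```

Keeping the fields separate makes it easy to vary one element, such as the tone or the audience, while holding the rest of the prompt fixed.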

With this CO-STAR prompt, we get the following concise response from our LLM.

You can see extensive examples in these two Colab notebooks:

In addition to CO-STAR, other ChatGPT-specific frameworks have been proposed, but the core of creating effective prompts remains the same: clarity, specificity, context, goal, task, action, etc.

Prompt types and tasks

So far we have explored best practices, guiding principles and techniques, from a variety of sources, on how to create a concise prompt for interacting with an LLM. Prompts are task-related: the type of task you want the LLM to perform determines the type of prompt and how you should write it.

Saravia analyzes various task-related prompts and recommends ways to create effective prompts for these tasks. In general, these tasks include:

- Text summarization

- Zero- and few-shot learning

- Extraction of information

- Answering questions

- Text and image classification

- Conversation

- Reasoning

- Code generation
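One way to make the link between task and prompt type concrete is a table of templates keyed by task. The sketch below covers only four of the tasks listed; the template wording, the `TEMPLATES` table, and the `make_prompt` helper are all illustrative assumptions, not templates from Saravia's guide.

```python
# Hypothetical templates: one prompt shape per task type.
TEMPLATES = {
    "summarization": "Summarize the following text in {n} sentences:\n\n{text}",
    "extraction": "List every {entity} mentioned in the following text, one per line:\n\n{text}",
    "classification": "Classify the following text as one of: {labels}.\n\nText: {text}\nLabel:",
    "question_answering": (
        "Answer the question using only the context below.\n\n"
        "Context: {text}\n\nQuestion: {question}\nAnswer:"
    ),
}


def make_prompt(task: str, **fields) -> str:
    """Fill the template for the given task with the supplied fields."""
    try:
        template = TEMPLATES[task]
    except KeyError:
        raise ValueError(f"No template for task: {task!r}") from None
    return template.format(**fields)


print(make_prompt("summarization", n=2, text="LLMs are trained on large text corpora."))
print(make_prompt(
    "classification",
    labels="positive, negative, neutral",
    text="The update fixed the crash but slowed everything down.",
))
```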

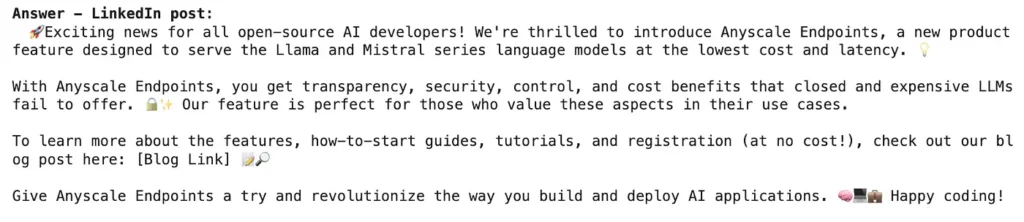

In this article we will not go through every type of prompt for every task. Instead, I will point you to the collection of Colab notebooks, where you can find illustrative examples. Note that all notebook examples were tested using the OpenAI API on GPT-4-turbo, and on Llama 2 series models, Mixtral series models, and Code Llama 70B (running on Anyscale Endpoints). To run these examples, you must have accounts on OpenAI and Anyscale Endpoints. These examples are derived from a subset of the GenAI Cookbook GitHub repository.