In the article "Data lakes: GCP solutions", we talked about data lakes as a tool for storing raw data that will later be processed and loaded into data warehouses. Among the various solutions offered by Google’s cloud platform is Cloud Storage.

Google Cloud Storage is the essential storage service for working with data, especially unstructured data. Let’s explore why it is a popular choice for deploying a data lake. Data in Cloud Storage persists beyond the life of the virtual machines or clusters in GCP. It is also relatively inexpensive compared to the cost of dedicated compute machines. For example, it may be more cost-effective to cache the results of previous computations in Cloud Storage. Or, if an application doesn’t need to run all the time, you can save its state in Cloud Storage and shut down the machine running it when you don’t need it.

Cloud Storage Features

Cloud Storage is an object store, meaning that it simply stores and retrieves binary objects without regard to the data they contain. However, to some extent it also provides file system compatibility and can make objects look and behave as if they were files, so you can copy files in and out of it. Data stored in Cloud Storage is durable: once written, it essentially stays there until you delete it. It is also available instantly and strongly consistent.

The data can be encrypted, kept private, or shared as needed. Because Cloud Storage is a global service, you can reach the data from anywhere. However, the data can also be kept in a single geographic location if the use case imposes constraints on where it may be stored. Finally, the data is served with moderate latency and high throughput.

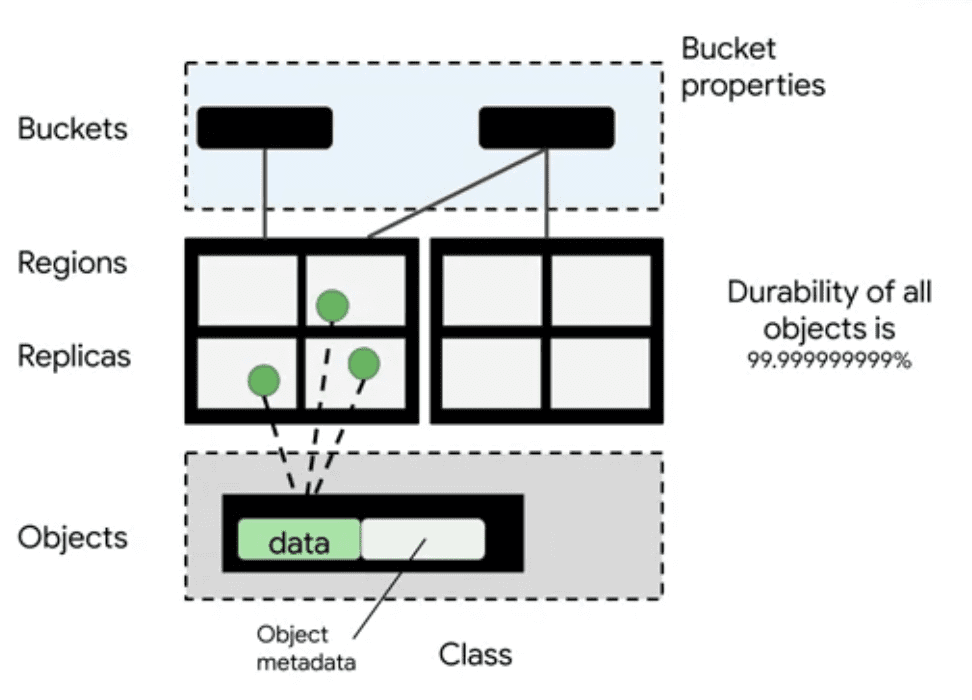

The two main entities in Cloud Storage are buckets and objects. Buckets are the containers in which objects live. Bucket names belong to a single global namespace: once a name is assigned to a bucket, no one else can use it until that bucket is deleted and the name is released. Having a global namespace greatly simplifies locating a particular bucket. When creating a bucket, you also specify the region or regions in which it will reside; choosing a region close to where the data will be processed reduces latency.
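To make the global-namespace rule concrete, here is a toy in-memory model. The `BucketRegistry` class is hypothetical and is not the Cloud Storage API; it only shows that a taken name is rejected for everyone, that deleting a bucket frees the name, and that the location is fixed when the bucket is created.

```python
class BucketRegistry:
    """Toy model of a globally unique bucket namespace (illustration only)."""

    def __init__(self) -> None:
        # One shared mapping for "everyone": bucket name -> (owner, location).
        self._buckets: dict[str, tuple[str, str]] = {}

    def create(self, name: str, owner: str, location: str) -> None:
        # A name already in use is rejected, whoever the requester is.
        if name in self._buckets:
            raise ValueError(f"bucket name {name!r} is already taken")
        # The location is recorded once, at creation time.
        self._buckets[name] = (owner, location)

    def delete(self, name: str) -> None:
        # Deleting the bucket releases the name for anyone to reuse.
        del self._buckets[name]

    def location_of(self, name: str) -> str:
        # With a global namespace, the name alone is enough to find the bucket.
        return self._buckets[name][1]


registry = BucketRegistry()
registry.create("declass", owner="alice", location="EU")
try:
    registry.create("declass", owner="bob", location="ASIA")
except ValueError as err:
    print(err)  # bucket name 'declass' is already taken
registry.delete("declass")
registry.create("declass", owner="bob", location="ASIA")  # the name is free again
print(registry.location_of("declass"))  # ASIA
```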

All objects in a bucket are replicated. This gives very high durability: when a replica is lost or corrupted, Cloud Storage can replace it with a copy. Moreover, an object is served from the replica closest to the requester, which reduces latency and increases throughput. This strategy applies to both regional and multi-region buckets.

Finally, Cloud Storage also stores the metadata associated with each individual object. Other Cloud Storage features use this metadata for purposes such as access control, compression, encryption, and object and bucket lifecycle management. For example, because the metadata records when an object was stored, Cloud Storage can automatically delete it after a specified period of time.
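A minimal sketch of how a creation timestamp in the metadata is enough to drive age-based deletion. The function `is_due_for_deletion` and the `metadata` dict are hypothetical; the dict only borrows the `timeCreated` field name that Cloud Storage records for each object. The real service evaluates lifecycle conditions asynchronously, so actual deletion can lag behind the moment the condition becomes true.

```python
from datetime import datetime, timedelta, timezone


def is_due_for_deletion(time_created: datetime, now: datetime, age_days: int) -> bool:
    """Return True once an object is at least `age_days` days old.

    Simplified model of an age-based lifecycle condition: the only input
    needed is the creation timestamp stored in the object's metadata.
    """
    return now - time_created >= timedelta(days=age_days)


# Hypothetical object metadata, shaped loosely like what the service records.
metadata = {
    "name": "de/modules/02/script.sh",
    "timeCreated": datetime(2024, 1, 1, tzinfo=timezone.utc),
}
now = datetime(2024, 2, 15, tzinfo=timezone.utc)  # 45 days later
print(is_due_for_deletion(metadata["timeCreated"], now, age_days=30))  # True
print(is_due_for_deletion(metadata["timeCreated"], now, age_days=60))  # False
```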

Creating a bucket: how to configure it

A bucket can be created either from the Google Cloud console or from the command line with gsutil. Whichever you use, several decisions need to be made when creating a bucket.

The first decision is the bucket’s location, which cannot be changed after creation. If you later need to move a bucket, you will have to copy all of its contents to a new bucket in the new location and pay the egress fees. So choose carefully.

The location can be a single region (e.g. europe-north1 in Northern Europe or asia-south1 in South Asia) or a multi-region, such as EU or ASIA, which means that objects are replicated across different regions within the EU or Asia respectively. The third option is a dual-region: for example, NAM4 means that objects are replicated in the central United States and the eastern United States.
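The three location types map directly onto the `-l` flag of `gsutil mb`, while `-c` sets the bucket’s default storage class. The sketch below only builds the command line (it does not run it), so the choices can be reviewed before anything is created; `make_bucket_command` and the bucket names are hypothetical.

```python
import shlex


def make_bucket_command(name: str, location: str, storage_class: str = "STANDARD") -> list[str]:
    """Build, but do not run, a `gsutil mb` command for a new bucket.

    -l sets the location, which cannot be changed after creation;
    -c sets the default storage class for objects added to the bucket.
    """
    return ["gsutil", "mb", "-l", location, "-c", storage_class, f"gs://{name}"]


# One hypothetical bucket per location type.
for bucket, location in [
    ("my-raw-data-mumbai", "asia-south1"),  # single region
    ("my-raw-data-eu", "EU"),               # multi-region
    ("my-raw-data-nam4", "NAM4"),           # dual-region (Iowa + South Carolina)
]:
    print(shlex.join(make_bucket_command(bucket, location)))
```

Returning an argument list rather than a single string keeps the command safe to pass to `subprocess.run` later, and `shlex.join` is used only for display.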

So, how do you choose a region? It depends on the application context. If, for example, all your computation and all your users are in Asia, you can choose an Asian region to reduce network latency.

But beyond that, how do you choose between asia-south1 and the ASIA multi-region? If you select a single region, your data is replicated across multiple zones within that region. This way, even if a single zone becomes unreachable, you still have access to the data, because different zones within the same region are isolated from most infrastructure and software failures. However, if the entire region becomes unreachable, for example because of a natural disaster, you won’t be able to access the data. If you want the data to remain available even in that case, select a multi-region or dual-region location, where replicas are stored in physically separate data centers.

Next, you need to determine how often you will access or modify the data, because the storage cost depends on it: by choosing a colder storage class, you get significant discounts if you access the data no more than once a month or once a quarter. When is that realistic? Typical examples are data archiving, backups, and disaster recovery.
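As a rule of thumb, the access frequency maps onto a storage class. The thresholds in this sketch follow the minimum storage durations of the colder classes (Nearline 30 days, Coldline 90 days, Archive 365 days); colder classes trade a lower storage price for retrieval fees. The function `choose_storage_class` is a hypothetical helper, not part of any Google library, and real decisions should also account for retrieval volume.

```python
def choose_storage_class(accesses_per_year: float) -> str:
    """Suggest a storage class from the expected number of accesses per year."""
    if accesses_per_year > 12:   # more often than about once a month
        return "STANDARD"
    if accesses_per_year > 4:    # at most monthly, but more than quarterly
        return "NEARLINE"
    if accesses_per_year > 1:    # at most quarterly
        return "COLDLINE"
    return "ARCHIVE"             # at most about once a year


for label, per_year in [
    ("daily dashboards", 365),
    ("monthly reports", 12),
    ("quarterly backups", 4),
    ("disaster-recovery copy", 1),
]:
    print(f"{label}: {choose_storage_class(per_year)}")
```

With these thresholds the four examples come out as STANDARD, NEARLINE, COLDLINE, and ARCHIVE respectively.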

File system simulation

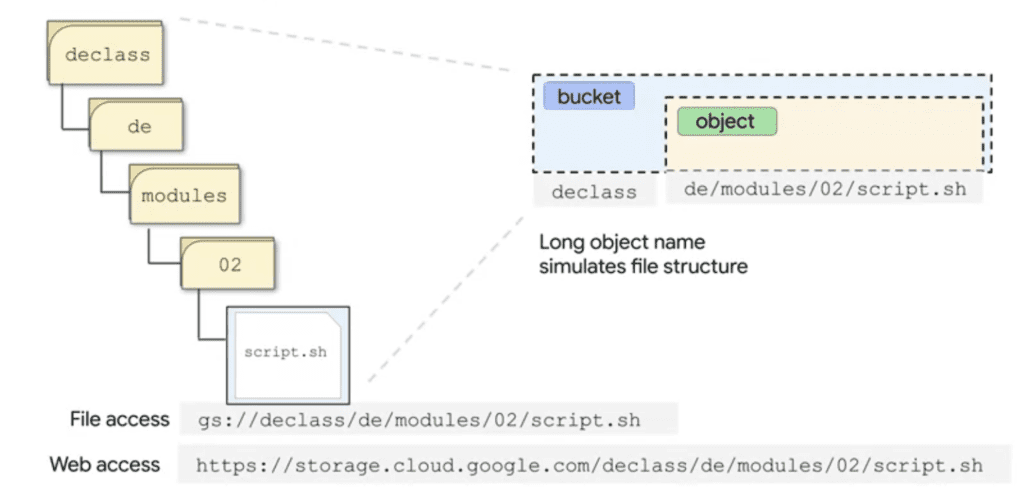

Cloud Storage uses the bucket name and the object name to simulate a file system. Here’s how it works.

The bucket name is the first term of the URI, and the object name follows it after a /. The object name can itself contain the / character several times. In this way, a hierarchy of folders and file paths is simulated, even though in reality there is only the name of a single object. In the example shown, the bucket name is declass and the object name is de/modules/02/script.sh, so the full URI is gs://declass/de/modules/02/script.sh. If this path were on a file system, it would look like a series of nested directories starting with declass.
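The split between bucket name and object name can be shown with a few lines of string handling. `split_gcs_uri` is a hypothetical helper: everything after the first / following the bucket is one flat object name, and the "directories" are just pieces of that string.

```python
def split_gcs_uri(uri: str) -> tuple[str, str]:
    """Split a gs:// URI into (bucket name, object name)."""
    prefix = "gs://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a gs:// URI: {uri!r}")
    # Only the first "/" separates the bucket from the object name;
    # any further "/" characters belong to the object name itself.
    bucket, _, object_name = uri[len(prefix):].partition("/")
    return bucket, object_name


bucket, name = split_gcs_uri("gs://declass/de/modules/02/script.sh")
print(bucket)                # declass
print(name)                  # de/modules/02/script.sh
print(name.split("/")[:-1])  # ['de', 'modules', '02'], the simulated folders
```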

For most practical purposes, Google Cloud Storage works like a file system, but there are some key differences. For example, imagine you want to move all the files in directory 02 to directory 03 within the modules directory. In a file system there is an actual directory structure, so you would simply modify the file system metadata, and the whole move is a single atomic operation. In an object store that simulates a file system, on the other hand, you have to search the bucket for the objects whose names contain 02 in the right position, and then rename each of them using 03. The result looks the same as moving files between directories. However, instead of working with a dozen files in a directory, the system may have to search through thousands of objects in the bucket to find the ones with the right names and then change each one. So performance can differ considerably.
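The move described above can be modeled with a plain dict standing in for the bucket’s flat namespace. `move_prefix` is a hypothetical helper, not a Cloud Storage call. The real service can list objects by prefix, so the scan is cheaper than a full pass, but each matching object must still be copied to its new name and the old name deleted, one object at a time, which is why the operation is not atomic.

```python
def move_prefix(bucket: dict[str, bytes], old: str, new: str) -> int:
    """Rename every object whose name starts with `old` to start with `new`.

    There is no directory to rename: each matching object is copied to
    its new name and then deleted under the old one. Partway through the
    loop, a listing would show some objects under each prefix.
    Returns the number of objects moved.
    """
    # Find the matching names first (the "search" step).
    matches = [name for name in bucket if name.startswith(old)]
    for name in matches:
        new_name = new + name[len(old):]
        bucket[new_name] = bucket[name]  # copy to the new name
        del bucket[name]                 # delete the old name
    return len(matches)


objects = {
    "de/modules/02/script.sh": b"#!/bin/sh",
    "de/modules/02/data.csv": b"a,b",
    "de/modules/01/readme.txt": b"hi",
}
print(move_prefix(objects, "de/modules/02/", "de/modules/03/"))  # 2
print(sorted(objects))
# ['de/modules/01/readme.txt', 'de/modules/03/data.csv', 'de/modules/03/script.sh']
```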

The time it takes to move objects from directory 02 to directory 03 can therefore be much longer, depending on how many other objects are stored in the bucket. Also, during the move the listing is inconsistent, with some files in the old directory and some in the new one.

Finally, a good practice is to avoid putting sensitive information in bucket names, because bucket names live in a global namespace.

Additional features

In addition to the features described above, some other functionality is worth mentioning.

Data in buckets can be kept private. Google Cloud Storage can be accessed with file-style commands, for example copying a file from a local directory into a bucket with gsutil cp. Cloud Storage can also be accessed through the web at storage.cloud.google.com. The site uses HTTPS (TLS) to transport data, protecting both credentials and data in transit.
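For browser access, an object’s authenticated URL is built from the bucket name and the object name under storage.cloud.google.com, always over HTTPS. The helper `browser_url` is hypothetical; it percent-encodes characters such as spaces while keeping / so the simulated folder path stays readable. Opening the URL still requires signing in with an account that has read access to the object.

```python
from urllib.parse import quote


def browser_url(bucket: str, object_name: str) -> str:
    """Authenticated browser URL for an object (served over HTTPS)."""
    # Encode unsafe characters in the object name but leave "/" untouched.
    return f"https://storage.cloud.google.com/{bucket}/{quote(object_name, safe='/')}"


print(browser_url("declass", "de/modules/02/script.sh"))
# https://storage.cloud.google.com/declass/de/modules/02/script.sh
```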

Cloud Storage has many other object management features. For example, you can set a retention policy on a bucket so that its objects cannot be deleted or overwritten until they reach a minimum age, while lifecycle rules can delete objects automatically after a predetermined period. You can also enable versioning to keep previous versions of an object when it is overwritten or deleted, so that they can be retrieved if necessary. Lifecycle management also covers storage classes: for example, you can automatically move objects to the Nearline storage class 30 days after they are created, or to the Coldline storage class after 90 days. This optimizes costs.
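The 30-day Nearline and 90-day Coldline policy above can be written as a lifecycle configuration in the JSON format accepted by `gsutil lifecycle set`. This is a sketch under those assumptions: the generator function `tiering_lifecycle` is hypothetical, and the `matchesStorageClass` conditions simply stop a rule from re-applying to objects that are already colder. Because `age` counts days since creation, the Coldline rule fires at day 90, not 90 days after the Nearline move.

```python
import json


def tiering_lifecycle(nearline_after_days: int = 30, coldline_after_days: int = 90) -> dict:
    """Lifecycle rules that move objects to colder storage classes by age."""
    return {
        "rule": [
            {
                "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
                "condition": {"age": nearline_after_days, "matchesStorageClass": ["STANDARD"]},
            },
            {
                "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
                "condition": {"age": coldline_after_days, "matchesStorageClass": ["NEARLINE"]},
            },
        ]
    }


print(json.dumps(tiering_lifecycle(), indent=2))
```

Saved as `lifecycle.json`, the output could then be applied with `gsutil lifecycle set lifecycle.json gs://my-raw-data-eu`, where the bucket name is hypothetical.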