Until a few years ago, configuring a server for one or more services was a difficult, time-consuming task with many pitfalls. For example, making two web applications based on different programming languages or different library versions coexist could cause conflicts that broke one or both of them. It was hard to create separate workspaces that did not interfere with each other, since each service depended on something provided by the server’s operating system. In addition, updates to the server or to individual programs could compromise the operation of one or more services.

So over the years, a system was developed that would provide a uniform abstraction from the underlying operating system, making it much easier for developers and system administrators to test and release programs and services.

Docker solves most of the problems described above. It relies on virtualization at the operating-system level, also known as containerization. Be careful not to confuse this with hardware virtualization: containers share the host’s kernel, whereas each virtual machine runs a complete guest operating system on virtualized hardware.

Docker is available for Linux, macOS and Windows 10 platforms. For Windows and Mac there is Docker Desktop, an easy-to-install application that lets you build and share containerized applications and microservices. It includes Docker Engine, the Docker CLI client, Docker Compose, Notary, Kubernetes and Credential Helper. For Linux, the installation instructions differ depending on the distribution used. You can find all the details for each operating system in the official guide.

In this article we’ll introduce the basic concepts on which Docker is based. If you want to start using it immediately, I recommend the video “How to get started with Docker”, presented at DockerCon 2020.


Docker engine

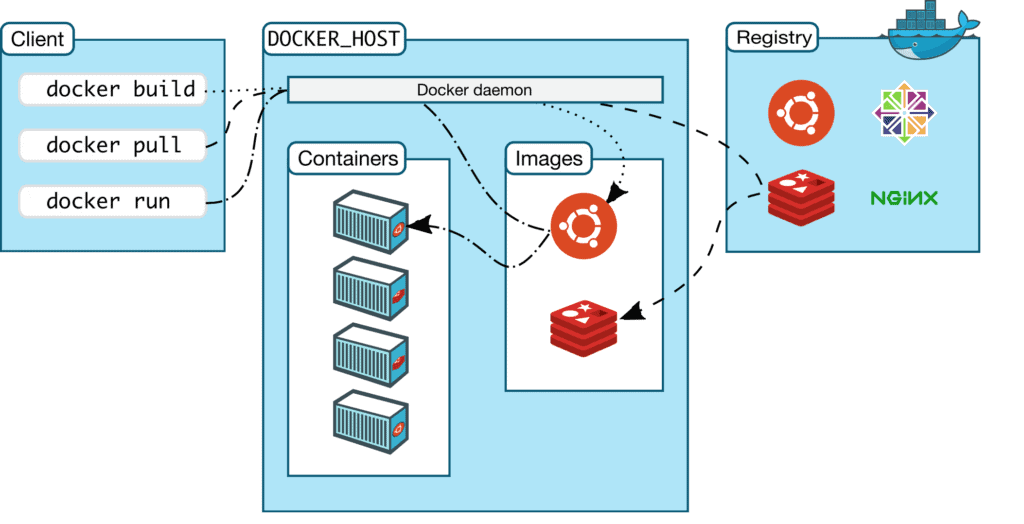

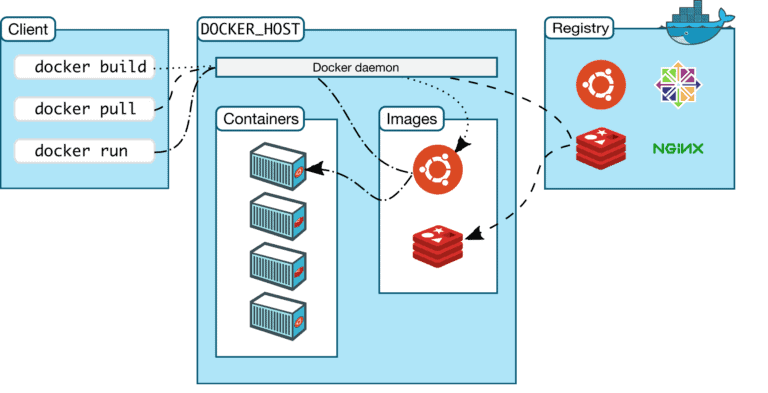

The Docker Engine is a client-server application consisting of:

- a server that is a daemon process (dockerd)

- a REST API that specifies the interfaces that programs can use to communicate with the daemon

- a command line interface (CLI) (docker)

Because Docker uses a client-server architecture, the client sends instructions through the REST API to the daemon, which is responsible for building, managing and deploying Docker containers. The client and the daemon can run on the same machine or on different machines.

A diagram of the architecture is shown in the figure.
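To make the client/daemon split concrete, the sketch below queries the daemon’s REST API directly, without going through the docker CLI. It assumes a Linux host where the daemon listens on the default Unix socket `/var/run/docker.sock` and where `curl` is installed; neither assumption comes from the text above, so adjust the path to your setup.

```shell
# Assumption: default socket path on Linux; a differently configured daemon may use another one.
DOCKER_SOCK=/var/run/docker.sock

if [ -S "$DOCKER_SOCK" ] && command -v curl >/dev/null 2>&1; then
  # Ask the daemon for its version information over the Unix socket.
  curl --silent --unix-socket "$DOCKER_SOCK" http://localhost/version \
    || echo "Request failed: check that your user may access $DOCKER_SOCK"
else
  echo "No Docker socket at $DOCKER_SOCK (or curl is missing)"
fi
```

If the daemon is reachable, the response is a JSON document describing the server, which is the kind of information the CLI obtains on your behalf.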

Docker CLI

The Docker CLI is the primary way to interact with Docker. Through the REST API, the Docker CLI sends a request to the Docker daemon to execute a particular task. The most common commands are as follows:

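- docker build: builds an image from a Dockerfile

- docker pull: downloads an image from a registry

- docker run: creates and starts a container from an image

- docker exec: runs a command inside a running container

For a complete overview of all commands we refer you to the official guide.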

Docker image

An image is a read-only template with instructions for creating a Docker container. Often an image is based on another image, with some additional customization. For example, you can build an image that is based on the ubuntu image, but installs the Apache web server and your own application, as well as the configuration details needed to make the application work.

The image forms the basis of any application developed with this technology. We could compare it to the result of a shell script that prepares a system with the necessary settings and libraries. Most Docker images are in turn based on another image, and the base image is typically a Linux distribution. To keep the image size as small as possible, a minimal base image such as Alpine, a Linux distribution of only about 5 MB, is often used.

You can create your own images or use those created by others and published in a registry. There are many pre-built images for some of the most common application stacks such as Ruby on Rails, Django, PHP-FPM with nginx, and so on. To build your own image, you create a Dockerfile. As we will see later, the Dockerfile defines the steps needed to create an image and run it. Each instruction in a Dockerfile creates a layer in the image. When you edit the Dockerfile and rebuild the image, only the layers affected by the change are rebuilt; the others are reused from the build cache. This is part of what makes images so lightweight, small, and fast when compared to other virtualization technologies. The image you create can then be shared publicly on Docker Hub.
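As a rough illustration of how layers interact with rebuilds, the sketch below writes a Dockerfile in which rarely changing steps come before frequently changing ones. The Python application, the `requirements.txt` and `app.py` file names and the `python:3.12-slim` base image are all hypothetical choices made for this example, not something the article prescribes.

```shell
# Scratch directory for the example (hypothetical project layout).
cache_ctx=$(mktemp -d)

cat > "$cache_ctx/Dockerfile" <<'EOF'
FROM python:3.12-slim
WORKDIR /app
# Dependencies change rarely: copy and install them first so this layer can be reused.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Application code changes often: only the layers from here on are rebuilt.
COPY . .
CMD ["python", "app.py"]
EOF

echo "Dockerfile written to $cache_ctx/Dockerfile"
```

With this ordering, editing only the application code should leave the dependency layer untouched on the next build. After building, `docker history` followed by the image name lists the layers that make up the image.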

Container

When an image is run on a host, it produces an isolated process called a container. Docker is written in the Go programming language and takes advantage of several features of the Linux kernel to provide its main functionality. Among these are namespaces, which isolate the workspace of each container. By default, a container is relatively well isolated from other containers and from the host machine. However, you can control how isolated its network, storage, and other underlying subsystems are.

Using the Docker CLI commands, you can create, start, stop, move or delete a container. In addition, a container can be connected to one or more networks and/or storage.

Attention

An important aspect to understand is that while a container is running, any changes it makes are written to the container’s own writable layer. Therefore, when the container is removed, those changes are lost. To preserve state, persistent storage is used by defining volumes.
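The following sketch shows one way to observe this behaviour with a named volume. The volume name `demo-data`, the mount path `/data` and the use of the `alpine` image are illustrative assumptions; the commands run only if a Docker daemon is reachable.

```shell
# Assumption: "demo-data" is just an example name for the volume.
VOLUME_NAME=demo-data

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  docker volume create "$VOLUME_NAME"
  # A first container writes a file into the volume and is then removed (--rm).
  docker run --rm -v "$VOLUME_NAME":/data alpine sh -c 'echo hello > /data/greeting.txt'
  # A second, brand-new container mounts the same volume and can still read the file.
  docker run --rm -v "$VOLUME_NAME":/data alpine cat /data/greeting.txt
  # Clean up the example volume.
  docker volume rm "$VOLUME_NAME"
else
  echo "Docker is not available; skipping the volume demo"
fi
```

Without the `-v` option, the file written by the first container would disappear together with that container.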

If you are curious to see how to create a container from scratch in Go, I recommend watching Liz Rice’s talk. Building a simple container helps you understand how Docker works, even though some aspects are not covered.

Dockerfile

A Dockerfile is a text document that contains in sequence all the commands that a user can call on the command line to assemble an image. To build an image you must run the command:

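$ docker build -t IMAGE .

Here IMAGE stands for the name you want to give the image, and the final dot tells Docker to use the current directory, which must contain the Dockerfile, as the build context. A simple example of a Dockerfile is as follows:

FROM ubuntu:latest

LABEL author="flowygo"

LABEL description="An example Dockerfile"

RUN apt-get update && apt-get install -y python3

COPY hello-world.py .

CMD ["python3", "hello-world.py"]

A Dockerfile can of course be made as elaborate as needed to create images with the desired characteristics.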

In the table below we list the most commonly used instructions in a Dockerfile.

| Instruction | Description |
|---|---|
| FROM | base image on which the subsequent instructions build |
| WORKDIR | sets the working directory for the RUN, CMD, ENTRYPOINT, COPY and ADD instructions that follow |
| COPY | copies files from the build context into the image |
| ADD | similar to COPY, but also supports extracting local archives and fetching remote URLs |
| RUN | executes a command in a new layer on top of the current image; the result is available to the next steps in the Dockerfile |
| CMD | provides the default command, or default arguments to ENTRYPOINT, for a running container |
| ENTRYPOINT | sets the executable to run at container startup |
| ENV | sets environment variables in the image |
| VOLUME | creates a mount point at the specified path in the container for data stored outside the container’s writable layer |
| LABEL | adds metadata to an image as a key/value pair |
| EXPOSE | documents the network ports on which the container listens; it does not publish them |
| ONBUILD | registers an instruction that is executed later, when the image is used as the base of another build |

To launch a container based on the new image, run the command

$ docker run IMAGE

where IMAGE is the name of the image. To interact with a running container, use the command

$ docker exec -it CONTAINER sh

where CONTAINER is the name or ID of the container. With this last command it is possible, for example, to open a shell inside the container, as shown here, or to execute another program. For every detail we refer you to the official documentation.

Guidelines and recommendations for writing Dockerfiles

To correctly write a Dockerfile you need to follow some guidelines.

Create ephemeral containers

The image defined by your Dockerfile should generate containers that are as “ephemeral” as possible. By “ephemeral” we mean that the container can be stopped and destroyed, then rebuilt and replaced with an absolute minimum of setup and configuration.

Exclude unnecessary files from the build context

To keep build times short, keep the build context as small as possible. When you run the docker build command, the directory you pass to it, typically the current directory, is used as the context for building the new image. To avoid including unnecessary files, and thereby increasing the size of the image, you can list them in a .dockerignore file.
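As a minimal sketch, the snippet below creates a `.dockerignore` file in a scratch directory. The entries (version control data, a `node_modules` folder, log files and a `.env` file) are hypothetical examples of content that usually does not belong in an image; your own project will need its own list.

```shell
# Scratch directory standing in for a project root (hypothetical).
ignore_ctx=$(mktemp -d)

cat > "$ignore_ctx/.dockerignore" <<'EOF'
# Version control metadata
.git
# Dependencies that are reinstalled during the build
node_modules
# Local logs and secrets
*.log
.env
EOF

cat "$ignore_ctx/.dockerignore"
```

The file must sit in the root of the build context; paths that match its patterns are left out of the context.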

Minimize layers

It is important to reduce the number of layers in the image. In Docker 1.10 and later, only the RUN, COPY, and ADD instructions create new layers. It is therefore recommended to combine related commands into a single instruction to reduce the number of layers created. Where possible, use multistage builds and copy only the necessary artifacts into the final image. This allows you to use applications or files in intermediate steps without increasing the size of the final image.
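One common way to combine commands is to chain them inside a single RUN instruction, as in the sketch below. The `ubuntu:24.04` base image and the `curl` and `ca-certificates` packages are only example choices.

```shell
# Scratch directory for the example Dockerfile.
layers_ctx=$(mktemp -d)

cat > "$layers_ctx/Dockerfile" <<'EOF'
FROM ubuntu:24.04
# One RUN instruction means one layer: update, install and clean up together,
# so the package index never ends up stored in a layer of its own.
RUN apt-get update \
 && apt-get install -y --no-install-recommends curl ca-certificates \
 && rm -rf /var/lib/apt/lists/*
EOF

grep -c '^RUN' "$layers_ctx/Dockerfile"
```

Written as three separate RUN instructions, the same steps would produce three layers, and the files deleted in the last step would still occupy space in the earlier ones.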

Use multistage builds

With multistage builds, you use multiple FROM statements in the Dockerfile. Each FROM statement can use a different base image, and each one starts a new build stage. You can selectively copy artifacts from one stage to another, leaving behind anything you don’t want in the final image. This way the final image is smaller and contains only the artifacts you need. For more details we refer you to the official documentation.
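A multistage Dockerfile could look like the sketch below. It assumes, purely for illustration, that the build context contains a small Go module (a `go.mod` file and a main package); the stage name `build`, the output path `/out/app` and the image tags are likewise arbitrary choices.

```shell
# Scratch directory for the example Dockerfile.
multi_ctx=$(mktemp -d)

cat > "$multi_ctx/Dockerfile" <<'EOF'
# Stage 1: full Go toolchain, used only to compile the program.
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: small runtime image that receives only the compiled binary.
FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
EOF

grep '^FROM' "$multi_ctx/Dockerfile"
```

Only the last stage ends up in the final image, so the compiler and the source code stay out of what you ship.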

Do not install unnecessary packages

To reduce complexity, dependencies, image size and build time, install only the packages that are strictly necessary.

Insight: add a user to the docker group

On Linux server installations, the Docker daemon binds to a Unix socket instead of a TCP port. By default that Unix socket is owned by the root user, and other users can access it only using sudo. The Docker daemon itself always runs as the root user.

To avoid running every Docker CLI command as superuser, you can add your user to the docker group, whose members are granted access to the socket. Keep in mind that membership in this group gives privileges equivalent to those of root. Usually the group is created at installation time. Otherwise you can create it with the command

$ sudo groupadd docker

At this point you can add a user to the docker group

$ sudo usermod -aG docker $USER

For the change to take effect, the user must log out and log back in, so that group membership is re-evaluated. After that, you can verify that docker commands run without sudo.

$ docker run hello-world

This command downloads a test image and runs it in a container. When the container runs, it prints an informational message and exits.
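If the command still asks for elevated privileges, a quick check like the one below may help tell whether the group change has been recorded and whether the current session has picked it up. It is only a sketch and relies on the standard `id` utility; the variable names are made up for the example.

```shell
# Name of the current user, without relying on $USER being set.
me=$(id -un)

# Groups recorded for the user in the system group database.
if id -nG "$me" | tr ' ' '\n' | grep -qx docker; then
  in_database=yes
else
  in_database=no
fi

# Groups of the current session, which are refreshed only at the next login.
if id -nG | tr ' ' '\n' | grep -qx docker; then
  in_session=yes
else
  in_session=no
fi

echo "docker group in database: $in_database, in this session: $in_session"
```

A result of "yes" for the database and "no" for the session suggests that the user was added correctly but has not logged in again yet.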

More details are available on this page.